Abstract#

We present a novel contextual combinatorial bandit approach for automated negotiation in multi-agent settings. Our method addresses the challenge of learning effective negotiation strategies when the opponent’s behavior and preferences are unknown.

Introduction#

Automated negotiation is a fundamental problem in multi-agent systems, with applications ranging from resource allocation to market trading. Traditional approaches often assume perfect information about opponent preferences, which is unrealistic in many real-world scenarios.

Problem Formulation#

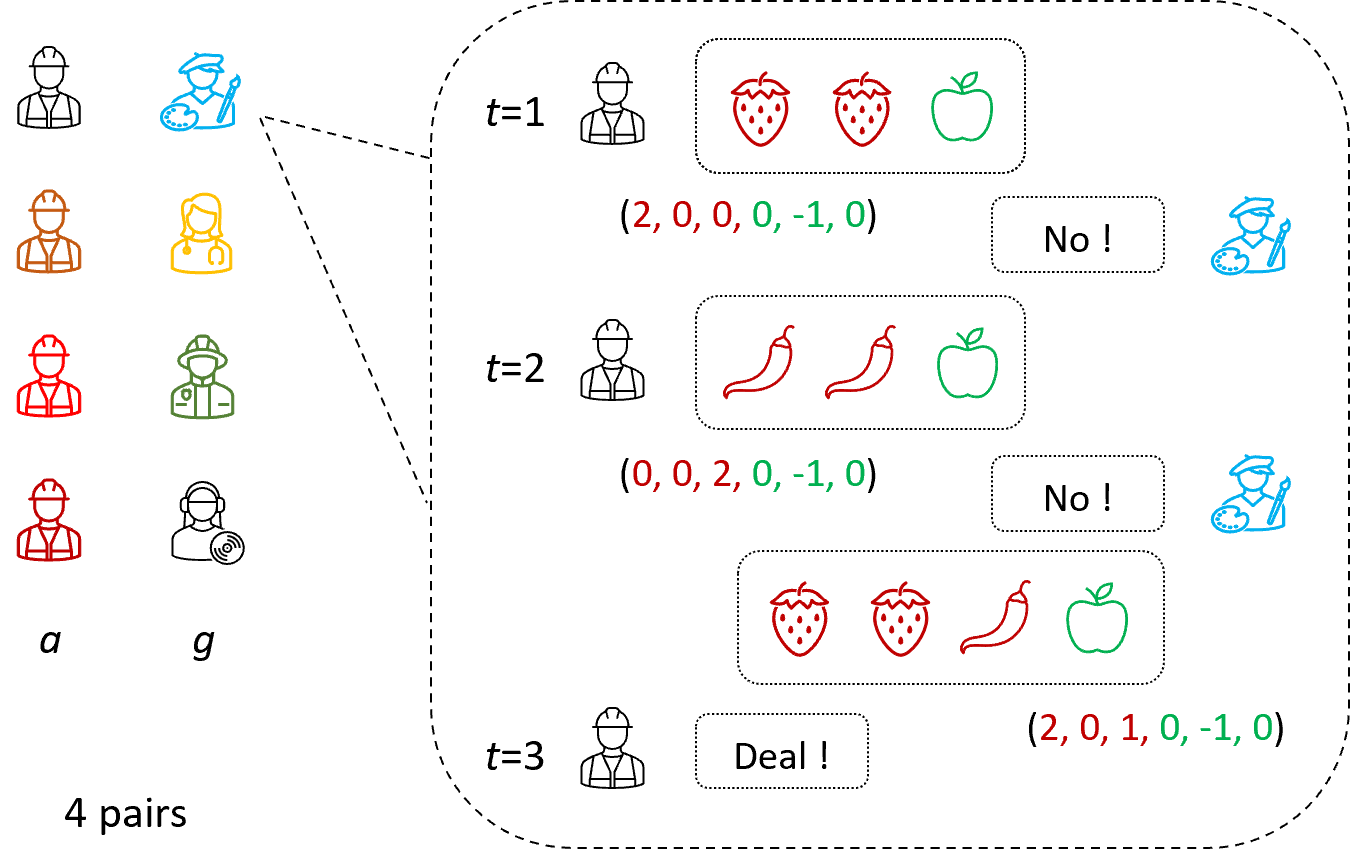

We formalize the negotiation problem as a contextual combinatorial bandit where:

- Actions: Negotiation offers (combinations of items/terms)

- Context: Current negotiation state and opponent behavior history

- Rewards: Negotiation outcomes (acceptance/rejection and utility)

Method#

Contextual Combinatorial Bandit Framework#

Our approach extends the combinatorial bandit setting to incorporate:

- Context-aware Policy: Learns to adapt strategies based on negotiation context

- Combinatorial Action Space: Handles complex offer structures

- Online Learning: Adapts to opponent behavior during negotiation

Algorithm Design#

The core algorithm follows these steps:

- Context Extraction: Extract relevant features from current negotiation state

- Offer Generation: Use upper confidence bounds to select promising offers

- Response Learning: Update model based on opponent’s response

- Strategy Adaptation: Refine negotiation strategy over time

Theoretical Analysis#

We provide regret bounds for our algorithm, showing:

- Sublinear Regret: O(√T log T) regret with respect to optimal strategy

- Context Adaptation: Improved bounds when context is informative

- Combinatorial Efficiency: Polynomial dependence on action space complexity

Experimental Results#

Simulated Environments#

- Bilateral Negotiation: Consistent improvements over baseline strategies

- Multi-issue Negotiation: Effective handling of complex negotiation spaces

- Opponent Adaptation: Quick adaptation to different opponent types

Real-world Application#

- Online Marketplace: Improved success rates in automated trading

- Resource Allocation: Better outcomes in distributed resource sharing

Key Contributions#

- Novel Formulation: First to cast negotiation as contextual combinatorial bandit

- Theoretical Guarantees: Rigorous analysis with regret bounds

- Practical Algorithm: Efficient implementation with strong empirical performance

- Broad Applicability: Demonstrated effectiveness across multiple domains

Related Work#

Our work builds upon:

- Combinatorial bandits literature

- Game-theoretic approaches to negotiation

- Online learning in multi-agent systems

Conclusion#

We have presented a principled approach to automated negotiation using contextual combinatorial bandits. Our method provides both theoretical guarantees and practical performance improvements, opening new directions for research in multi-agent learning.

Citation#

@inproceedings{li2024negotiation,

title={A Contextual Combinatorial Bandit Approach to Negotiation},

author={Li, Yexin and Mu, Zhancun and Qi, Siyuan},

booktitle={International Conference on Machine Learning},

year={2024}

}